In this story, we will explore how to convert old-school REST interfaces into a chatbot using plain ChatGPT function calling. There are lots of data-centric REST APIs out there, and many of them are used on websites to list or search all kinds of datasets. The main idea here is to create a chat-based experience on top of existing REST interfaces. So we are going to add a conversational layer powered by ChatGPT on top of an existing REST interface which performs searches on an event database.

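We will also explore how to create a chatbot UI and a Python-based server from scratch. This means we will not use any pre-built open source library to build the UI. The server will be written in Python and the web-based user interface will be written using TypeScript and React.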

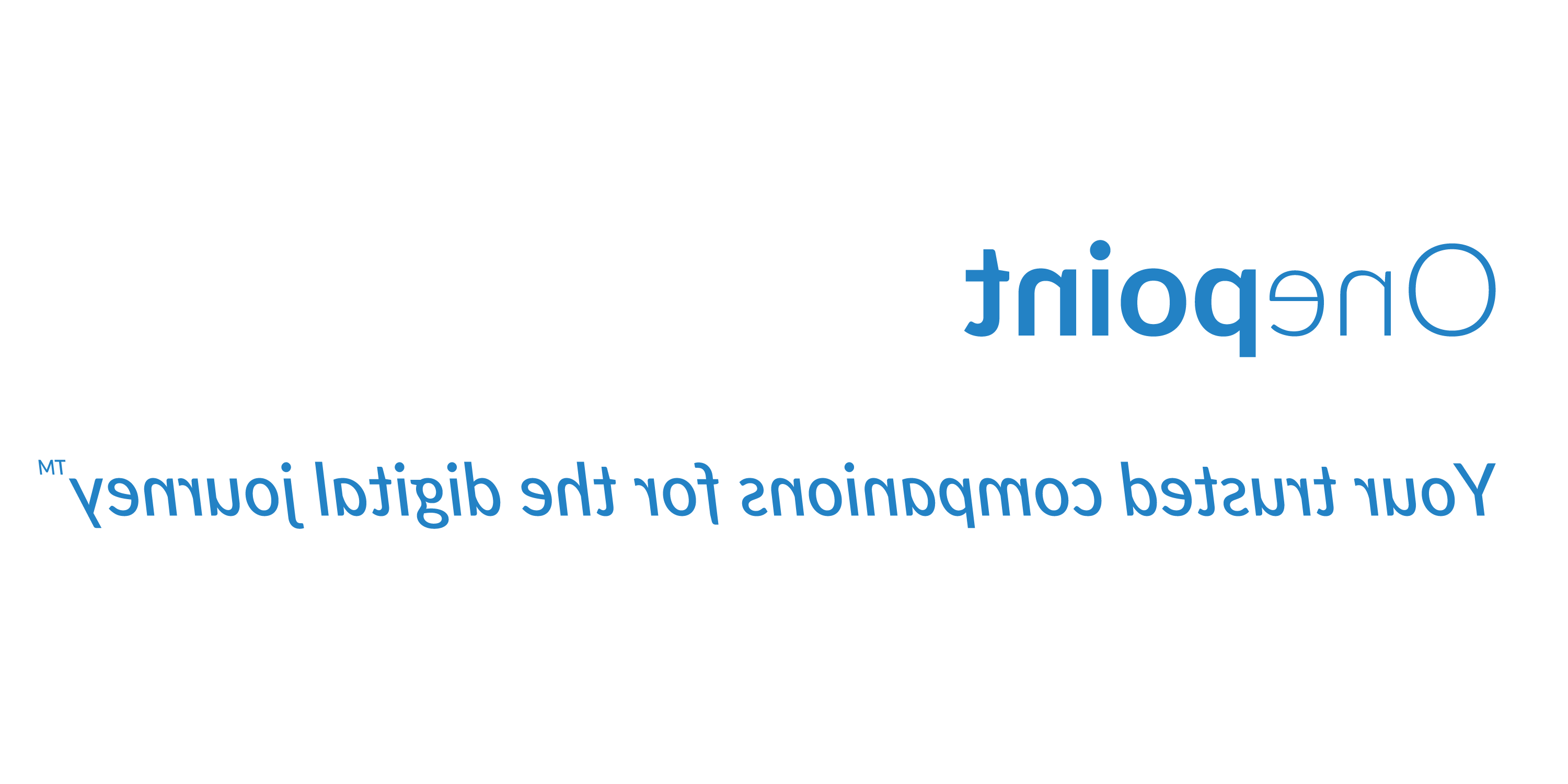

Event Chat — A REST Conversion Project

To understand how to convert a REST interface into a chatbot, we have built a small event chat application. This application receives a user question about upcoming events and answers it with a list of event details, including images and links.

Event Chat screenshot

This application supports streaming and replies with external links and images that are stored in a database.

Application Architecture

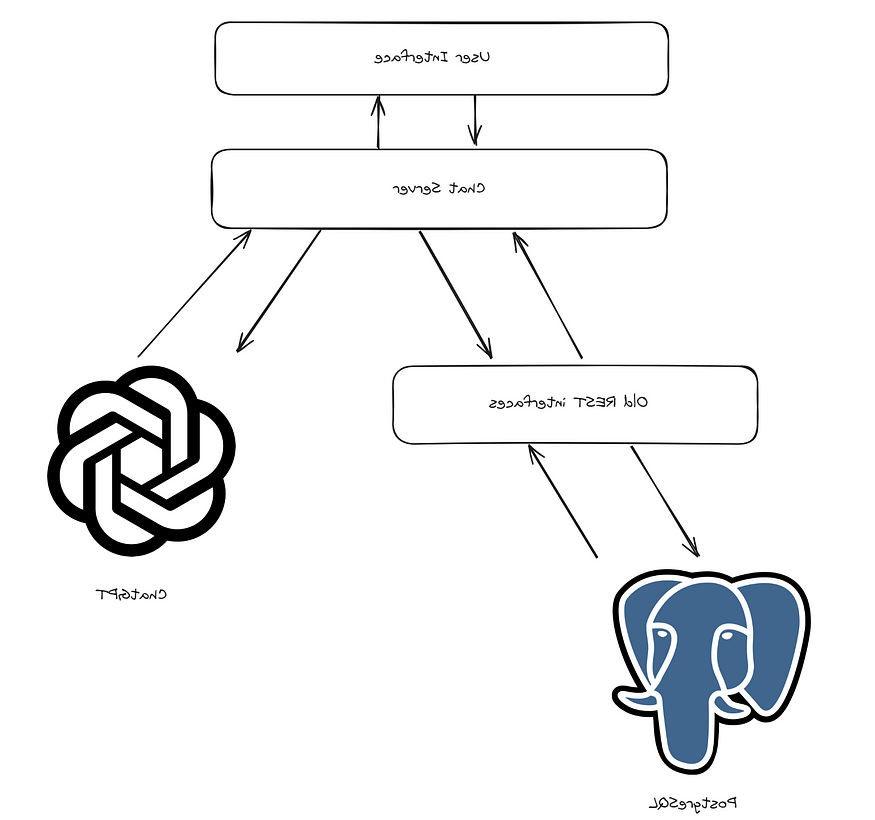

Here is the rough architecture of the application we built:

High Level components

We have the following components in this application:

A user interface written in TypeScript with the React framework. The user interface communicates with the chat server via websockets. The input is just a question about some events.

A chat server written in Python, containing an HTTP server that also uses websockets to communicate with the client. The chat server orchestrates the interaction with ChatGPT and the old-school REST interfaces to answer the user's questions.

ChatGPT which analyses the user question and routes it to the correct function and then receives the results from the REST interface to produce the final output.

The REST interfaces, which search for events and enrich the retrieved events with some additional data.

A PostgreSQL database which provides the data for the REST interfaces. Strictly speaking, we search using a Lucene index, but the data in it comes from a PostgreSQL database.

Application Workflow

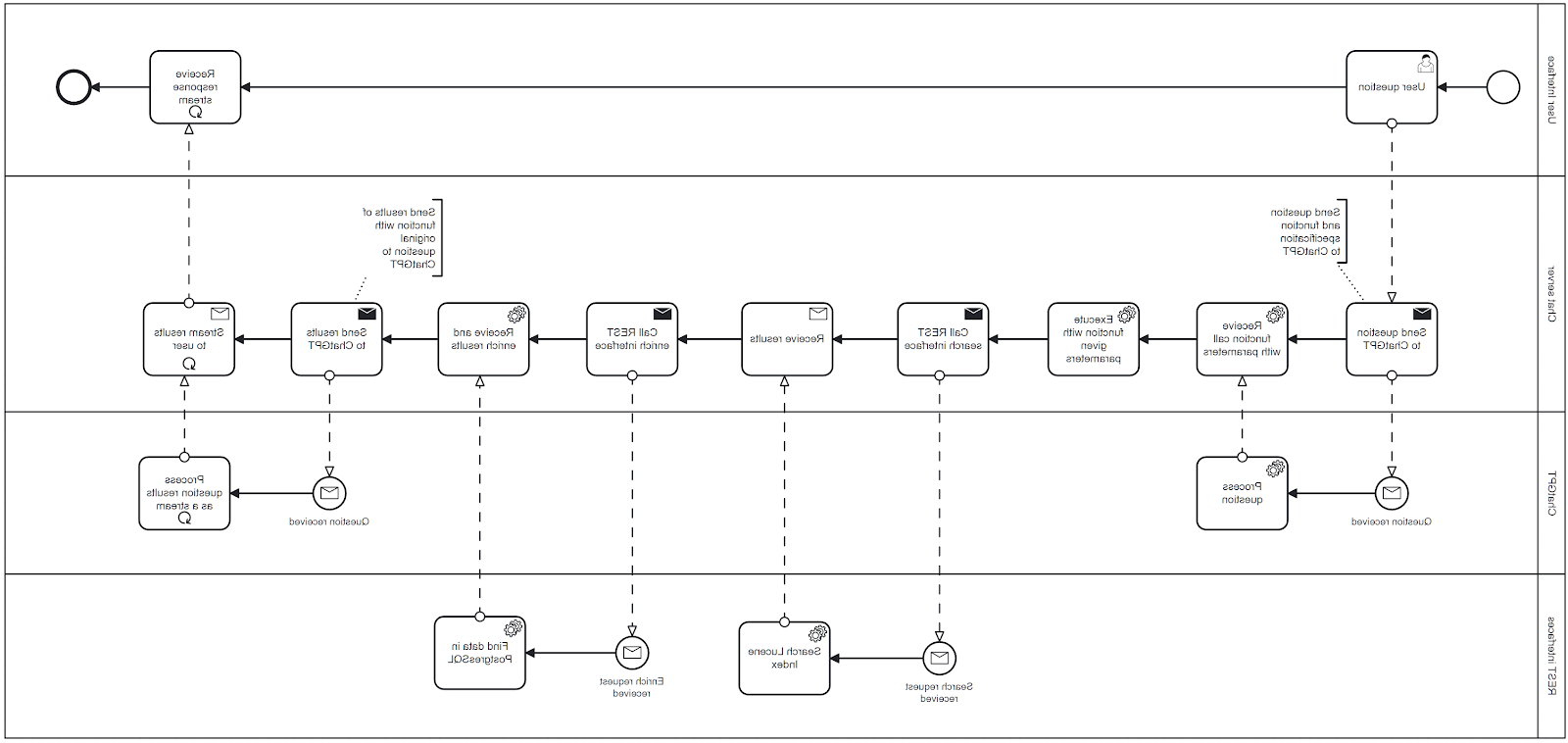

This is the workflow that processes a single chat request:

Single event request workflow


Note that we have omitted error boundaries from the diagram.

The workflow has 4 participants (pools):

The user, who just asks a question and receives the response to it.

The chat server, which orchestrates most of the operations between all the other participants.

ChatGPT, which receives the initial question, figures out the function call and then receives the results of the function call to format them into natural language.

The REST interfaces: there are only two of them. One is a simple search interface, and the second enriches the initial response with URLs of web pages and images.

Chat Server Workflow in Detail

The main actions happen in the chat server layer. Here are the details of the workflow in this pool (happy path only):

Initially it receives the question from the client, which should typically be a question about events.

The question is sent to ChatGPT 3.5 (gpt-3.5-turbo-16k-0613) together with the function calling request.

If no error occurs, the chat server receives from ChatGPT the function name and the parameters extracted from the user question.

The function specified by ChatGPT is called (at this stage, this is only an event search REST interface).

The results are returned from the REST interface in JSON format.

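A second call to the REST interface then enriches the current data. The purpose of this call is to enrich the initial search results with URLs and images.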

ChatGPT is then called a second time with a reference to the previously called function and the enriched data.

ChatGPT processes the JSON data and replies in natural language. The results are actually streamed to the client: as soon as ChatGPT generates a token, it is streamed to the client via websockets.
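The REST part of this workflow (search, then enrich, then hand JSON back to ChatGPT) can be sketched as below. This is a minimal sketch, not the project's code: `search_events`, `fetch_links` and `search_and_enrich` are hypothetical names, and the two REST calls are replaced by stubs returning canned data so the flow runs offline. The merge step assumes that search results and enrichment data share an event `id`.

```python
import json
from typing import Dict, List


def search_events(search: str, country: str = "") -> List[Dict]:
    # Hypothetical stand-in for the event search REST interface.
    return [
        {"id": 1, "title": f"{search.title()} retreat", "country": country},
        {"id": 2, "title": f"{search.title()} evening", "country": country},
    ]


def fetch_links(event_ids: List[int]) -> Dict[int, Dict[str, str]]:
    # Hypothetical stand-in for the enrichment REST interface.
    # Only event 1 has extra data here, to show events without links survive.
    return {
        event_id: {
            "url": f"https://example.com/events/{event_id}",
            "image": f"https://example.com/images/{event_id}.png",
        }
        for event_id in event_ids
        if event_id == 1
    }


def search_and_enrich(arguments_json: str) -> str:
    """Runs between the two ChatGPT calls: search, enrich, return JSON."""
    events = search_events(**json.loads(arguments_json))    # first REST call
    links = fetch_links([event["id"] for event in events])  # second REST call
    # Merge URLs/images into each event; events without extras stay unchanged.
    enriched = [{**event, **links.get(event["id"], {})} for event in events]
    return json.dumps(enriched)  # JSON passed to ChatGPT for the final answer


print(search_and_enrich('{"search": "meditation", "country": "United Kingdom"}'))
```

Keeping the enrichment as a separate call mirrors the two REST interfaces described above; the search interface stays simple and the presentation data is layered on afterwards.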

How does Function Calling work?

Function calling was introduced in June 2023 to help developers receive structured JSON describing calls to locally callable functions. Function calling turned out to be a great fit for integrating ChatGPT with REST interfaces, because it helps to improve the reliability of ChatGPT's output.

When you use function calling with ChatGPT models such as gpt-3.5-turbo-16k-0613 or gpt-4-0613, you initially send the messages, the specification of a function and a prompt. In the ideal scenario, ChatGPT responds with the function to call.

In a second step you call the function specified by ChatGPT with the extracted parameters and get the result in some text format (such as JSON).

In the final step, the output of the called function is sent to ChatGPT together with the initial prompt. ChatGPT then generates the final answer in natural language.

So there are 3 steps:

Query ChatGPT to get the function call and its parameters. The user prompt and the function specification are sent in a JSON-based format.

Call the actual Python function with the parameters that ChatGPT extracted from the user prompt.

Query ChatGPT with the function output and the initial user prompt to get the final natural language output.
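The three steps can be sketched as a single round trip. In this minimal sketch the model and the local function runner are injected as plain callables (`chat` and `call_function` are hypothetical names), so the control flow can be shown without a network. The message shapes follow the legacy `functions` / `function_call` format of the 0613 models; with the pre-1.0 `openai` Python library, `chat` could be a thin wrapper around `openai.ChatCompletion.create` that returns `response["choices"][0]["message"]`, but that wiring is an assumption, not the project's code.

```python
import json
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]


def run_function_calling(
    messages: List[Message],
    functions: List[Dict[str, Any]],
    chat: Callable[..., Message],
    call_function: Callable[[str, Dict[str, Any]], str],
) -> str:
    """Three-step function-calling round trip against an injected chat model."""
    # Step 1: send the prompt together with the function specifications.
    first = chat(messages=messages, functions=functions)
    call = first.get("function_call")
    if call is None:
        # The model answered directly, so there is no function to run.
        return first["content"]
    # Step 2: run the local function with the arguments the model extracted.
    output = call_function(call["name"], json.loads(call["arguments"]))
    # Step 3: send the function output back along with the original messages.
    final = chat(
        messages=messages
        + [{"role": "function", "name": call["name"], "content": output}]
    )
    return final["content"]
```

Injecting `chat` keeps the orchestration testable with a fake model, and the early return handles the case where ChatGPT decides no function call is needed.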

Example

In our case we have a function with this signature:

```python
from typing import List, Optional


def event_search(
    search: str,
    locality: Optional[str] = "",
    country: Optional[str] = "",
    tags: List[str] = [],
    repeat: bool = True,
) -> str:
    ...
```
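ChatGPT only learns about `event_search` through a JSON Schema description sent alongside the prompt. The sketch below shows what such a specification could look like for the signature above. The descriptions are invented for illustration, and `repeat` is left out on the assumption that it is an internal flag rather than something a user would ask for.

```python
import json

# Specification for event_search in the JSON Schema format used by the
# `functions` parameter of the 0613 chat models. Descriptions are illustrative.
EVENT_SEARCH_FUNCTION = {
    "name": "event_search",
    "description": "Search for upcoming events by keywords, place and tags.",
    "parameters": {
        "type": "object",
        "properties": {
            "search": {"type": "string", "description": "Keywords, e.g. 'meditation'."},
            "locality": {"type": "string", "description": "City or town of the event."},
            "country": {"type": "string", "description": "Country of the event."},
            "tags": {
                "type": "array",
                "items": {"type": "string"},
                "description": "Optional tags to filter events by.",
            },
        },
        # Only `search` has no default value in the Python signature.
        "required": ["search"],
    },
}

print(json.dumps(EVENT_SEARCH_FUNCTION, indent=2))
```

Marking only `search` as required lets ChatGPT omit `locality`, `country` and `tags` when the question does not mention them, which matches the defaults in the Python signature.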

When the user asks for example:

Can you find events about meditation in the United Kingdom?

In the first call (step 1), ChatGPT will extract the two corresponding function parameters:

search: “meditation”

country: “United Kingdom”

The function will then be called with these two parameters (step 2) and will in turn call the REST interface, which returns a JSON-based response. This JSON response will then be sent back to ChatGPT together with the original question (step 3).
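Step 2 for this example can be sketched as follows. ChatGPT returns the arguments as a JSON-encoded string, so they must be decoded before the call. The project looks the function up with Python's `eval` (see execute_function_call further down); this sketch swaps in a dictionary whitelist instead, so the model can only trigger functions registered on purpose. The `event_search` body here is a hypothetical stub, and the `function_call` dictionary is an illustrative shape, not a captured response.

```python
import json
from typing import Any, Callable, Dict, List, Optional


def event_search(
    search: str,
    locality: Optional[str] = "",
    country: Optional[str] = "",
    tags: Optional[List[str]] = None,
    repeat: bool = True,
) -> str:
    # Hypothetical stub: the real function calls the event search REST interface.
    return json.dumps({"search": search, "country": country, "events": []})


# Whitelist of local functions the model is allowed to trigger.
AVAILABLE_FUNCTIONS: Dict[str, Callable[..., str]] = {"event_search": event_search}


def execute_function_call(function_call: Dict[str, Any]) -> str:
    name = function_call["name"]
    if name not in AVAILABLE_FUNCTIONS:
        raise ValueError(f"Model requested an unknown function: {name}")
    # The model returns its arguments as a JSON-encoded string, not a dict.
    kwargs = json.loads(function_call["arguments"])
    return AVAILABLE_FUNCTIONS[name](**kwargs)


# Illustrative function_call for the meditation question above.
function_call = {
    "name": "event_search",
    "arguments": '{"search": "meditation", "country": "United Kingdom"}',
}
print(execute_function_call(function_call))
# {"search": "meditation", "country": "United Kingdom", "events": []}
```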

In step 3 the chat completion API will be called with 3 messages:

A system message (background information for the ChatGPT request)

The original user prompt

The function call with the extracted JSON

Note that the last message has the role “function” and carries the JSON in its “content” field. The full code of the function which extracts the parameters for this last call to the ChatGPT completion API can be found in the function extract_event_search_parameters in http://github.com/onepointconsulting/event_management_agent/blob/main/event_management_agent/service/event_search_service.py
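A minimal sketch of how those three messages could be assembled for the meditation example. `build_final_messages` is a hypothetical helper, and the system prompt and event data are made up for illustration; the project's actual prompt lives in extract_event_search_parameters.

```python
import json
from typing import Any, Dict, List


def build_final_messages(
    system_prompt: str,
    user_prompt: str,
    function_name: str,
    function_output: Any,
) -> List[Dict[str, str]]:
    """Messages for the last chat completion call (step 3)."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
        # The function result travels as a message with role "function":
        # `name` identifies the function, `content` carries its JSON output.
        {"role": "function", "name": function_name, "content": json.dumps(function_output)},
    ]


messages = build_final_messages(
    "You are a helpful assistant that answers questions about events.",  # hypothetical
    "Can you find events about meditation in the United Kingdom?",
    "event_search",
    [{"title": "Meditation retreat", "url": "https://example.com/events/1"}],  # made-up data
)
for message in messages:
    print(message["role"], "->", message["content"][:50])
```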

Event Chat Implementation

The Event Chat prototype contains two applications. The first application is the back-end application written in Python and can be found in this repository:

http://github.com/onepointconsulting/event_management_agent

The second application is the front-end application, written in TypeScript using React:

http://github.com/onepointconsulting/chatbot-ui

Back-End Code

The back-end code consists of a websocket-based server built with python-socketio (which implements a transport protocol that enables real-time, bidirectional, event-based communication between clients) and aiohttp (an asynchronous HTTP client/server for asyncio and Python).

The main websocket server implementation can be found in this file:

The function which handles the user questions is the following:

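This is the function that triggers the whole workflow on the chat server. It receives the question from the client, triggers the event search and streams the data to the client. It also makes sure that the stream is properly closed if an error occurs.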

This method calls two other methods:

process_search: this method processes the incoming question up to the point at which the search via the REST API is executed.

aprocess_stream: this method receives the search result and returns an iterable that allows streaming to the client.

These methods can be found in this file: http://github.com/onepointconsulting/event_management_agent/blob/main/event_management_agent/service/event_search_service.py

You can find the process_search method here: http://github.com/onepointconsulting/event_management_agent/blob/main/event_management_agent/service/event_search_service.py#L115

This method first calls event_search_openai, the main function, which performs all the OpenAI function calling requests: http://github.com/onepointconsulting/event_management_agent/blob/main/event_management_agent/service/event_search_service.py#L53

The process_search method also calls execute_chat_function, which takes the completion_message produced by the OpenAI call, finds the function using Python's eval function and finally executes the event_search function: http://github.com/onepointconsulting/event_management_agent/blob/main/event_management_agent/service/event_search_service.py#L89

The aprocess_stream method handles the result of the second call to event_search_openai in process_search. It mostly loops through the stream of tokens generated by the OpenAI API: http://github.com/onepointconsulting/event_management_agent/blob/main/event_management_agent/service/event_search_service.py#L176
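Streaming tokens to the client while always closing the stream, even on errors, can be sketched with plain asyncio. This is a minimal sketch under several assumptions: the chunks follow the OpenAI-style streaming shape (`chunk["choices"][0]["delta"]`, as in the pre-1.0 Python library), the event names `"response"`, `"error"` and `"stop"` are hypothetical, and `emit` is an injected coroutine function. With python-socketio it could be something like `lambda event, data: sio.emit(event, data, to=sid)`, which is an assumed wiring, not the project's code.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable, Dict, List, Tuple


async def token_stream(chunks: AsyncIterator[Dict]) -> AsyncIterator[str]:
    # Each streamed chunk carries an incremental "delta"; not every delta has text.
    async for chunk in chunks:
        token = chunk["choices"][0]["delta"].get("content")
        if token:
            yield token


async def stream_answer(
    chunks: AsyncIterator[Dict],
    emit: Callable[[str, str], Awaitable[None]],
) -> None:
    try:
        async for token in token_stream(chunks):
            await emit("response", token)  # forward each token as soon as it arrives
    except Exception as error:
        await emit("error", str(error))
    finally:
        # Always tell the client the stream is over, even after an error.
        await emit("stop", "")


async def demo() -> None:
    async def fake_chunks():
        for piece in ["Here ", None, "are some events."]:
            yield {"choices": [{"delta": {"content": piece} if piece else {}}]}

    sent: List[Tuple[str, str]] = []

    async def emit(event: str, data: str) -> None:
        sent.append((event, data))

    await stream_answer(fake_chunks(), emit)
    print(sent)


asyncio.run(demo())
```

The `finally` block is what keeps the client from waiting forever: whether the token loop finishes normally or fails halfway, a closing event is always sent.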

Front-End Code

The main component with the chat interface is:

http://github.com/onepointconsulting/chatbot-ui/blob/main/src/components/MainChat.tsx

Apart from rendering the UI, it uses a hook, useWebsocket, which contains the websocket event handlers built with the socket.io-client library. The implementation of the useWebsocket hook can be found in this file: http://github.com/onepointconsulting/chatbot-ui/blob/main/src/hooks/useWebsocket.ts

Observations

This project is just meant as a small prototype and a learning experience, and it could be improved in many ways. In particular, the user interface would need a better, more professional design, and it lacks features such as chat history.

Takeaways

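If you have REST interfaces that allow you to search data, then you should be able to build chat interfaces on top of them. ChatGPT function calling is a good way to stack a chat layer on top of these search interfaces. You do not need a very powerful model: you can use older models like gpt-3.5-turbo-16k-0613 and still provide a good user experience.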

Writing your own chat user interface is also an interesting experience and allows you to create much more customized implementations than projects like Chainlit or Streamlit. Projects like Chainlit give you a lot of features and help you get up and running quickly, but at some stage they limit your design choices if you need a lot of UI customization. Writing your own chat user interface does, however, require some proficiency with TypeScript or JavaScript and a UI framework such as React, Vue or Svelte.

If you want chat streaming, then you should definitely use the WebSocket protocol, which also lets you keep a chat memory quite conveniently thanks to its built-in session.
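One way to keep such a memory is a plain dictionary keyed by the connection's session id (the `sid` that python-socketio passes to event handlers). The `SessionMemory` class below is a hypothetical sketch, not part of the project; it also trims old messages on the assumption that prompts must stay within the model's context window, and it should be cleared on disconnect.

```python
from collections import defaultdict
from typing import Dict, List


class SessionMemory:
    """Keeps a separate chat history per websocket session id (sid)."""

    def __init__(self, max_messages: int = 20) -> None:
        if max_messages < 1:
            raise ValueError("max_messages must be at least 1")
        self.max_messages = max_messages
        self._history: Dict[str, List[Dict[str, str]]] = defaultdict(list)

    def add(self, sid: str, role: str, content: str) -> None:
        history = self._history[sid]
        history.append({"role": role, "content": content})
        # Keep only the most recent messages so prompts stay a bounded size.
        del history[: -self.max_messages]

    def messages(self, sid: str) -> List[Dict[str, str]]:
        # Return a copy so callers cannot mutate the stored history.
        return list(self._history.get(sid, []))

    def forget(self, sid: str) -> None:
        # Call this on disconnect so memory does not grow without bound.
        self._history.pop(sid, None)
```

The messages returned for a session can be prepended to the next request, so follow-up questions such as "and in France?" keep their context.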

My last takeaway is that you do not always need LangChain whenever you want to interface with LLMs. LangChain is very convenient as a layer on top of many LLM APIs, but it comes at the cost of a large dependency tree. If you know that you will only use one very specific feature of one particular LLM, then you should perhaps consider not using LangChain.

Gil Fernandes, Onepoint Consulting